Fine-tuning

Fine-tuning is the process of further training a pre-trained AI model on your own data so that it understands and responds to user queries the way your organisation needs. ToothFairyAI allows organisations to build, own and host their own AI models. This document provides an overview of the fine-tuning process.

Menu location

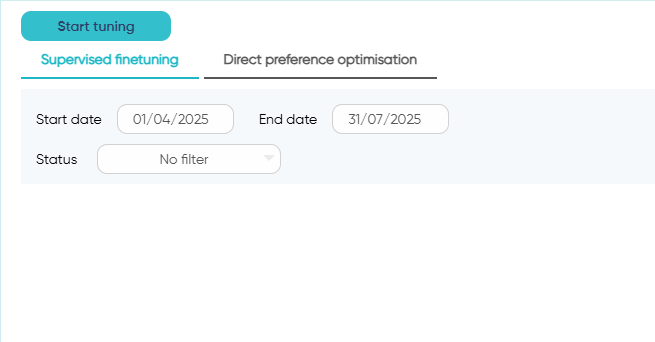

Fine-tuning can be accessed from the following menu:

Fine-tuning > Start tuning

The fine-tuning section consists of the following tabs:

- Supervised finetuning

- Direct preference optimisation

Fine-tuning process

The fine-tuning process consists of the following steps:

- Give a name to the fine-tuning process: The user can give a name to the fine-tuning process. The name of the fine-tuning job will later be used to identify the fine-tuned model in the Hosting & Models section in the agents configuration.

- Provide a description: The user can provide a description of the fine-tuning process (optional).

- Filter topics: Similarly to the Knowledge Hub and Agents configuration, ToothFairyAI allows the user to filter the training data based on the topics selected. By default, all topics are selected, which means all training data available in the workspace will be used for the fine-tuning process.

- Select the model: Selection of the model to be fine-tuned. The selection influences both the dataset and the model output. The user can select from the following table:

| Model | Description |
|---|---|
| Deepseek R1 - Llama 3.3 70B Distil | Llama 3.3 70B Distil model from Deepseek |
| Deepseek R1 - Qwen 1.5B Distill | Qwen 1.5B Distill model from Deepseek |
| Deepseek R1 - Qwen 14B Distill | Qwen 14B Distill model from Deepseek |
| Llama 3.3 70B | Llama 3.3 70 billion parameter model |
| Llama 3.2 1B | Llama 3.2 1 billion parameter model |
| Llama 3.2 3B | Llama 3.2 3 billion parameter model |
| Llama 3.1 70B | Llama 3.1 70 billion parameter model |
| Llama 3.1 8B | Llama 3.1 8 billion parameter model |
| Qwen 2.5 14B | Qwen 2.5 14 billion parameter model |
| Qwen 2.5 72B | Qwen 2.5 72 billion parameter model |

- Dataset only: Starter, Pro and Business workspaces can only generate downloadable datasets, for both training and evaluation. Enterprise subscriptions can fine-tune the model and associate the fine-tuned model with the agents of their choice.

Both Llama 3.3 and Llama 3.1 models support tool calling and planning capabilities. Therefore, you can fine-tune not only text generation but also tool use, such as API calls, DB queries and planning tasks!

In other words, you can deeply customise even Orchestrator agents to suit your use cases!

Fine-tuning configuration parameters

When configuring your fine-tuning job, you'll encounter several technical parameters. Understanding these parameters helps you optimize the training process for your specific use case.

Learning rate (%)

Default: 0.0001

The learning rate controls how quickly the model adapts to your training data. Think of it as the "step size" the model takes when learning.

- Lower values (e.g., 0.00001): More cautious learning, slower but more stable training. Recommended for large models or when you want to preserve the model's existing capabilities.

- Higher values (e.g., 0.001): Faster learning but may cause instability or "forgetting" of the base model's knowledge.

Learning rate peak ratio

Default: 0

This parameter controls the learning rate warmup schedule. When set to 0, no warmup is applied.

- Value of 0: Learning rate stays constant throughout training.

- Positive values (e.g., 0.1): The learning rate will increase from a lower starting point to the peak value, then potentially decrease. This helps stabilize early training.

Learning rate warmup ratio

Default: 0

Determines what fraction of training steps are used for learning rate warmup.

- Value of 0: No warmup period.

- Example value of 0.1: The first 10% of training steps gradually increase the learning rate from near-zero to the target learning rate.

This prevents large gradient updates early in training that could destabilize the model.
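
As a rough illustration of the warmup ratio described above, the sketch below computes the learning rate at a given training step. It assumes a linear ramp followed by a constant rate; the exact schedule shape used by the platform is not documented here, and the function name `learning_rate_at` is hypothetical.

```python
def learning_rate_at(step: int, total_steps: int,
                     base_lr: float = 0.0001,
                     warmup_ratio: float = 0.0) -> float:
    """Linear warmup to base_lr, then constant (assumed schedule shape)."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Ramp linearly from base_lr / warmup_steps up to base_lr.
        return base_lr * (step + 1) / warmup_steps
    # Warmup finished (or disabled with a ratio of 0): use the target rate.
    return base_lr


# With 1,000 steps and a warmup ratio of 0.1, the first 100 steps ramp up.
for step in (0, 49, 99, 500):
    print(step, learning_rate_at(step, total_steps=1000, warmup_ratio=0.1))
```

With a ratio of 0 the function returns the target learning rate from the first step, which matches the "no warmup period" behaviour.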

Min dataset for training test

Default: 200

The minimum number of examples required in your dataset to proceed with fine-tuning.

- This safety check ensures you have enough data for meaningful training.

- If your dataset has fewer examples than this threshold, the system will prevent training to avoid poor results.

Test dataset size (%)

Default: 0.1 (10%)

The share of your dataset reserved for evaluation (not used for training), entered as a fraction between 0 and 1.

- 10% (0.1): Recommended default. For a 1,000-example dataset, 100 examples are used for testing, 900 for training.

- Lower values (e.g., 0.05): More data for training, less for evaluation. Use when you have limited data.

- Higher values (e.g., 0.2): More robust evaluation but less training data.
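
The split arithmetic, together with the minimum dataset check from the previous section, can be sketched as follows. This is an illustration only: the function name is hypothetical, and rounding the test size to the nearest whole example is an assumption.

```python
def split_sizes(n_examples: int, test_fraction: float = 0.1,
                min_examples: int = 200) -> tuple[int, int]:
    """Return (train_size, test_size), or raise if the dataset is too small."""
    if n_examples < min_examples:
        raise ValueError(
            f"dataset has {n_examples} examples; at least {min_examples} are required"
        )
    # Assumption: the test size is rounded to the nearest whole example.
    test_size = round(n_examples * test_fraction)
    return n_examples - test_size, test_size


print(split_sizes(1000))        # (900, 100), matching the example above
print(split_sizes(1000, 0.2))   # (800, 200)
```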

Number of evaluations

Default: 20

How many times during training the model's performance is evaluated on the test dataset.

- More evaluations (e.g., 50): Better tracking of training progress, helps identify overfitting early.

- Fewer evaluations (e.g., 5): Faster training but less insight into model performance during training.

Max epochs

Default: 20

An epoch is one complete pass through your entire training dataset. This sets the maximum number of passes.

- More epochs (e.g., 50): Model sees the data more times, potentially better learning but higher risk of overfitting.

- Fewer epochs (e.g., 3-5): Faster training, lower risk of overfitting but may not fully learn the patterns.

The training may stop early if the model converges before reaching max epochs.

Number of checkpoints

Default: 1

Checkpoints are saved snapshots of your model during training.

- 1 checkpoint: Only the final model is saved (most common).

- Multiple checkpoints (e.g., 5): Saves intermediate versions. Useful if you want to compare different training stages or if training might be interrupted.

Batch size

Default: 8

The number of training examples processed together in one training step.

- Larger batches (e.g., 32): More stable gradient estimates, faster training on powerful hardware, but requires more memory.

- Smaller batches (e.g., 2-4): Less memory required, can work on smaller GPUs, but training may be noisier.

Batch size 8 is a balanced default for most use cases.
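
Batch size, max epochs and the number of evaluations together determine how long a job runs and how often it is evaluated. The sketch below estimates those figures; it assumes evaluations are spread evenly across the run and that training does not stop early, so the result is an upper bound. The function and key names are hypothetical.

```python
import math


def training_schedule(train_examples: int, batch_size: int = 8,
                      max_epochs: int = 20, num_evaluations: int = 20) -> dict:
    """Estimate step counts from the parameters above (no early stopping)."""
    steps_per_epoch = math.ceil(train_examples / batch_size)
    total_steps = steps_per_epoch * max_epochs
    # Assumption: evaluations are spread evenly across the whole run.
    eval_every = max(1, total_steps // num_evaluations)
    return {
        "steps_per_epoch": steps_per_epoch,
        "total_steps": total_steps,
        "eval_every_n_steps": eval_every,
    }


# 900 training examples with the default settings.
print(training_schedule(900))
# {'steps_per_epoch': 113, 'total_steps': 2260, 'eval_every_n_steps': 113}
```

Doubling the batch size roughly halves the number of steps per epoch, which is why a larger batch can shorten training when memory allows.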

Rank for LoRA adapter weights

Default: 8

LoRA (Low-Rank Adaptation) is an efficient fine-tuning technique. The rank controls the capacity of the adaptation.

- Lower rank (e.g., 4): Fewer trainable parameters, faster training, smaller fine-tuned models, but less adaptation capacity.

- Higher rank (e.g., 16-32): More trainable parameters, can capture more complex patterns, but slower and requires more memory.

Rank 8 is a sensible starting point for most applications.
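
To give a feel for how rank affects model size, the sketch below counts the parameters one LoRA adapter adds to a single weight matrix. In the standard LoRA formulation the update is the product of two small matrices, so the count grows linearly with rank. The 4096 x 4096 layer size is a made-up example, not a property of any model listed above.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int = 8) -> int:
    """Parameters added by one adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


# Hypothetical 4096 x 4096 projection layer, for illustration only.
full = 4096 * 4096
for rank in (4, 8, 32):
    added = lora_trainable_params(4096, 4096, rank)
    print(f"rank={rank}: {added:,} trainable parameters "
          f"({added / full:.2%} of the full matrix)")
```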

Alpha value for LoRA adapter

Default: 8

The alpha parameter scales the LoRA updates and works together with the rank parameter.

- Same as rank (e.g., 8): Standard configuration for balanced adaptation.

- Higher than rank (e.g., 16 for rank 8): Stronger LoRA influence on the base model.

- The ratio of alpha to rank controls how much the LoRA adaptation influences the base model's behavior.
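
In the standard LoRA formulation, the adapter's update is multiplied by alpha divided by rank before it is added to the base weights. Assuming ToothFairyAI follows that convention (this is not confirmed here), the effect of the two settings can be sketched as:

```python
def lora_scaling(alpha: float = 8, rank: int = 8) -> float:
    """Multiplier applied to the LoRA update in the standard formulation."""
    return alpha / rank


print(lora_scaling(8, 8))    # 1.0  (alpha equal to rank)
print(lora_scaling(16, 8))   # 2.0  (alpha higher than rank)
print(lora_scaling(8, 16))   # 0.5  (rank raised without raising alpha)
```

The last line shows why the two parameters are usually adjusted together: raising rank alone weakens the update under this convention.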

Dropout for LoRA adapter

Default: 0.1

Dropout randomly deactivates a percentage of neurons during training to prevent overfitting.

- 0.1 (10%): Standard dropout rate, provides good regularization for most datasets.

- Lower values (e.g., 0.05): Less regularization, use when you have limited training data.

- Higher values (e.g., 0.2-0.3): More aggressive regularization, helps prevent overfitting on large datasets.

- 0: No dropout, may lead to overfitting but can be useful for very small datasets.

Select LoRA layers

Choose which specific model layers to apply LoRA fine-tuning to. By default, common layer types are selected based on the model architecture.

Available layer types (depending on the selected model):

- k_proj: Key projection layers (used in attention mechanism)

- v_proj: Value projection layers (used in attention mechanism)

- q_proj: Query projection layers (used in attention mechanism)

- o_proj: Output projection layers (used in attention mechanism)

- up_proj: Up-projection layers (used in feed-forward networks)

- gate_proj: Gate projection layers (used in gated feed-forward networks)

- down_proj: Down-projection layers (used in feed-forward networks)

Selection strategies:

- All attention layers (k_proj, v_proj, q_proj, o_proj): Standard approach for fine-tuning, focuses on how the model processes relationships between tokens. Recommended for most use cases.

- Add feed-forward layers (up_proj, down_proj, gate_proj): More comprehensive fine-tuning that includes how the model transforms information. Use for complex domain adaptation.

- Selective layers: Choose specific layers to reduce memory usage and training time while targeting particular model behaviors.

When to customize:

- Memory constraints: Select fewer layers (e.g., only q_proj and v_proj) to reduce GPU memory requirements.

- Faster training: Fewer layers train faster but may have reduced adaptation capacity.

- Standard use: Keep the default selection which typically includes the key attention layers.
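
The selection strategies above amount to choosing a list of layer names. The sketch below expresses them as presets; the preset keys and the helper function are hypothetical, and only the layer names come from this page. In libraries such as Hugging Face PEFT, a list like this is typically passed as `target_modules`.

```python
ATTENTION_LAYERS = ["q_proj", "k_proj", "v_proj", "o_proj"]
FEED_FORWARD_LAYERS = ["gate_proj", "up_proj", "down_proj"]

# Hypothetical preset names; the platform exposes a layer picker, not these keys.
PRESETS = {
    "attention": ATTENTION_LAYERS,
    "attention+feed_forward": ATTENTION_LAYERS + FEED_FORWARD_LAYERS,
    "minimal": ["q_proj", "v_proj"],
}


def select_lora_layers(preset: str, available: list[str]) -> list[str]:
    """Return the preset's layers that the chosen model actually exposes."""
    return [name for name in PRESETS[preset] if name in available]


available = ATTENTION_LAYERS + FEED_FORWARD_LAYERS
print(select_lora_layers("minimal", available))   # ['q_proj', 'v_proj']
```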

Train on inputs

Options: Auto / True / False (Default: Auto)

Controls whether the model is trained to predict both the input (prompt) and output (response) or only the output.

- Auto (Recommended): The system determines the setting automatically based on your dataset and use case.

- True: Model learns to predict both user inputs and assistant responses. This can improve contextual understanding and is useful when you want the model to learn the style and structure of both questions and answers.

- False: Model only learns to predict assistant responses (the standard supervised fine-tuning approach). The model focuses solely on generating appropriate outputs given inputs.

When to use each option:

- Use Auto for most cases unless you have specific requirements.

- Use True if you want the model to learn conversational patterns and input formatting.

- Use False for standard instruction-following fine-tuning where only response quality matters.
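
One common way to implement the True/False distinction is to mask the prompt tokens in the training labels so they do not contribute to the loss. The sketch below shows that technique with made-up token ids. It is an assumption that the platform works this way, and `build_labels` is a hypothetical helper; the value -100 is the default label that PyTorch's cross-entropy loss ignores.

```python
IGNORE_INDEX = -100  # label value skipped by PyTorch's cross-entropy loss by default


def build_labels(prompt_ids: list[int], response_ids: list[int],
                 train_on_inputs: bool) -> list[int]:
    """Return per-token labels for one prompt/response pair."""
    if train_on_inputs:
        # Loss is computed on every token, prompt included.
        return prompt_ids + response_ids
    # Loss is computed on response tokens only; prompt tokens are masked out.
    return [IGNORE_INDEX] * len(prompt_ids) + response_ids


prompt, response = [11, 12, 13], [21, 22]  # made-up token ids
print(build_labels(prompt, response, train_on_inputs=True))   # [11, 12, 13, 21, 22]
print(build_labels(prompt, response, train_on_inputs=False))  # [-100, -100, -100, 21, 22]
```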

For most use cases, the default values provide a good starting point. Consider adjusting these parameters if:

- You have a very small dataset (< 500 examples): Reduce epochs to 3-5 to avoid overfitting

- You have a very large dataset (> 50,000 examples): You may increase batch size and epochs

- Training is unstable: Reduce learning rate by 10× (e.g., to 0.00001)

- Training is too slow: Increase batch size if memory allows
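
The rules of thumb above can be written down as a small helper that starts from the documented defaults. This is a sketch, not the platform's logic: the field names are hypothetical, and the specific values chosen for each adjustment (5 epochs, batch size 16) are examples within the ranges suggested above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FineTuneSettings:
    """Defaults as documented above; field names are hypothetical."""
    learning_rate: float = 0.0001
    max_epochs: int = 20
    batch_size: int = 8


def suggest_settings(n_examples: int, unstable: bool = False,
                     base: FineTuneSettings = FineTuneSettings()) -> FineTuneSettings:
    """Apply the rules of thumb listed above to the default settings."""
    settings = base
    if n_examples < 500:
        # Very small dataset: fewer epochs to limit overfitting.
        settings = replace(settings, max_epochs=5)
    elif n_examples > 50_000:
        # Very large dataset: a bigger batch (16 is an arbitrary example).
        settings = replace(settings, batch_size=16)
    if unstable:
        # Unstable training: reduce the learning rate tenfold.
        settings = replace(settings, learning_rate=settings.learning_rate / 10)
    return settings


print(suggest_settings(300))
print(suggest_settings(10_000, unstable=True))
```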

Fine-tuning completion

Once the fine-tuning process is completed, the user can download the generated dataset and, depending on the subscription plan, the fine-tuned model weights. Regardless of the subscription type, the resulting datasets are formatted in a universal format that can be used for fine-tuning any model later on.

For Enterprise workspaces, ToothFairyAI can make additional base models available (e.g. Mistral/Mixtral/DeepSeek) upon request.

For Enterprise workspaces, we can also enable, upon request, fine-tuning of the models available to the agents in the platform.

Fine-tuning limitations

Based on our experience, fine-tuning the model with a dataset of fewer than 100 well-curated examples will not yield the desired results. We recommend using a dataset of at least 1,000 examples. How long the process takes depends on the size of the dataset, the number of fine-tuning epochs and the size of the model. The user is notified by email when a fine-tuning job is completed.